Google Scholar | Experience | Publications | Projects | Awards | GSoC

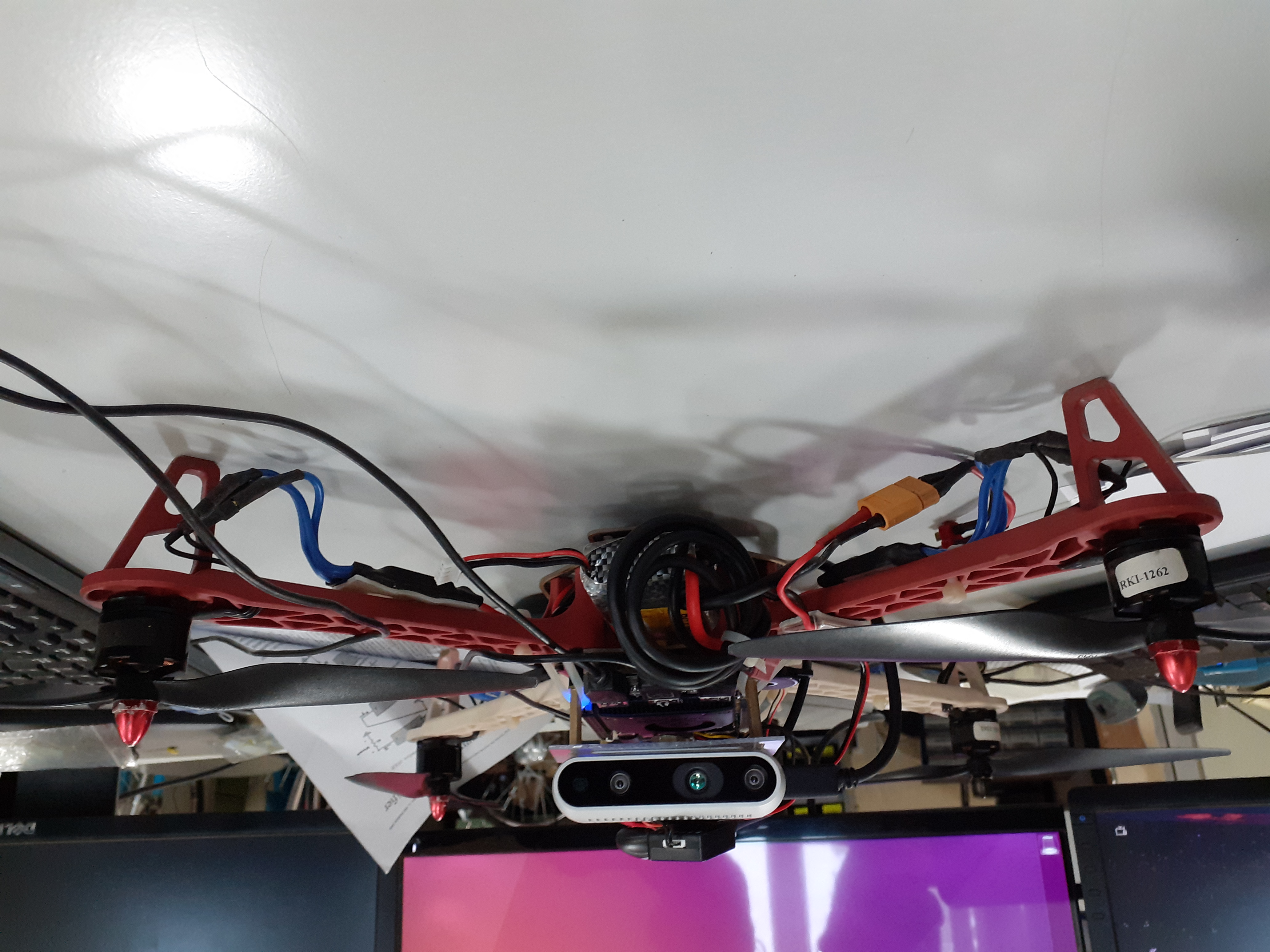

I'm a Machine Learning Engineer at Cobot, where I develop perception algorithms for our robot, Proxie. My mission is to develop multi-modal foundation models and perception pipelines that act as robust representational priors for generally capable agents.

I graduated from Georgia Tech, where I worked on scaling generalizable bimanual manipulation policies using egocentric human play data, advised by Danfei Xu. I have an undergraduate degree in electronics from BITS Pilani, where I received the Innovator of the Year Award. For my undergraduate thesis, I worked on resource-aware visual-inertial odometry algorithms at the Autonomous Robots Lab, advised by Kostas Alexis.

Prior to my current role, I worked on the computer vision team at MathWorks, developing vision foundation models. As part of the Google Summer of Code '22 program, I worked as an open-source developer on the project Landmark Mapping using Quantized EfficientDet on Edge TPUs. I've spent time at MathWorks, Addverb Technologies and KPIT developing software for robots and self-driving cars.

I am an aviation enthusiast and love building and flying quadcopters. Apart from aviation, I enjoy cycling, reading, playing football, amateur photography and sketching.

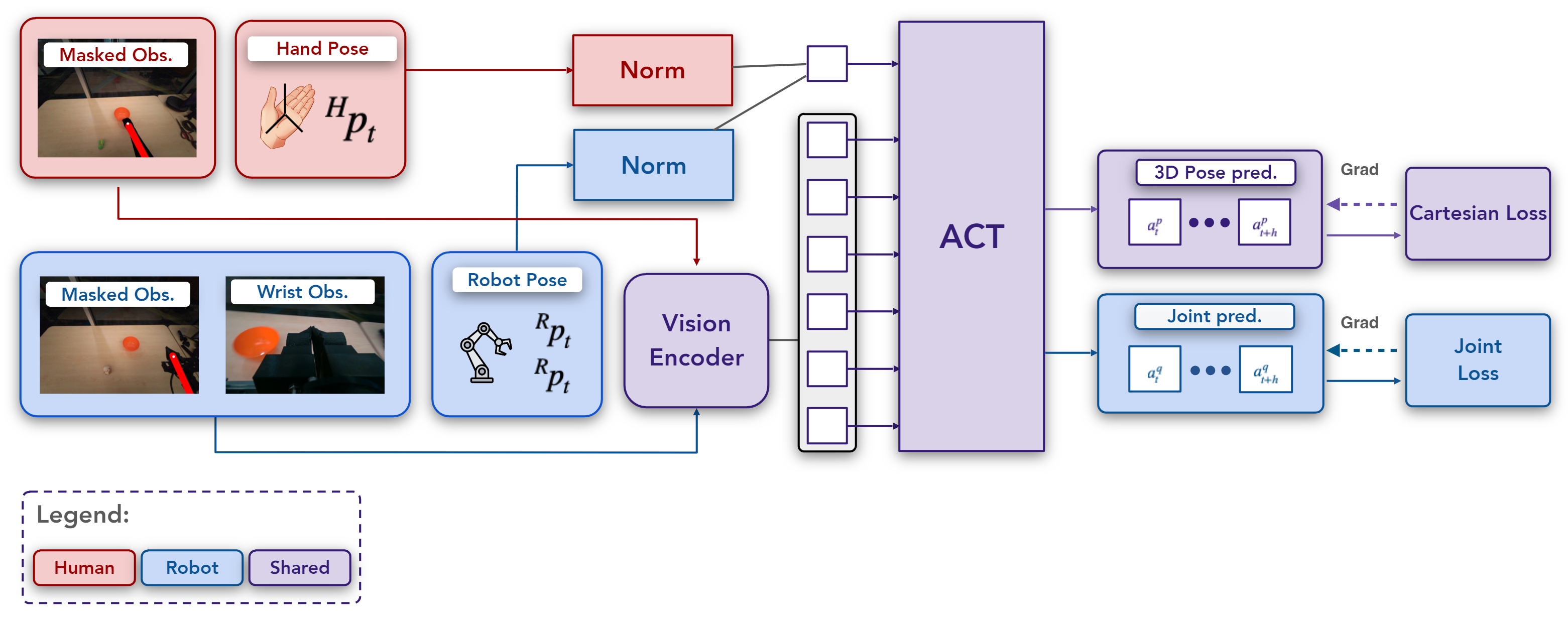

[Paper] [Website] Simar Kareer, Dhruv Patel*, Ryan Punamiya*, Pranay Mathur*, Shuo Cheng, Chen Wang, Judy Hoffman, Danfei Xu. Accepted to IEEE International Conference on Robotics and Automation (ICRA) 2025; X-Embodiment Workshop, Conference on Robot Learning (CoRL) 2024

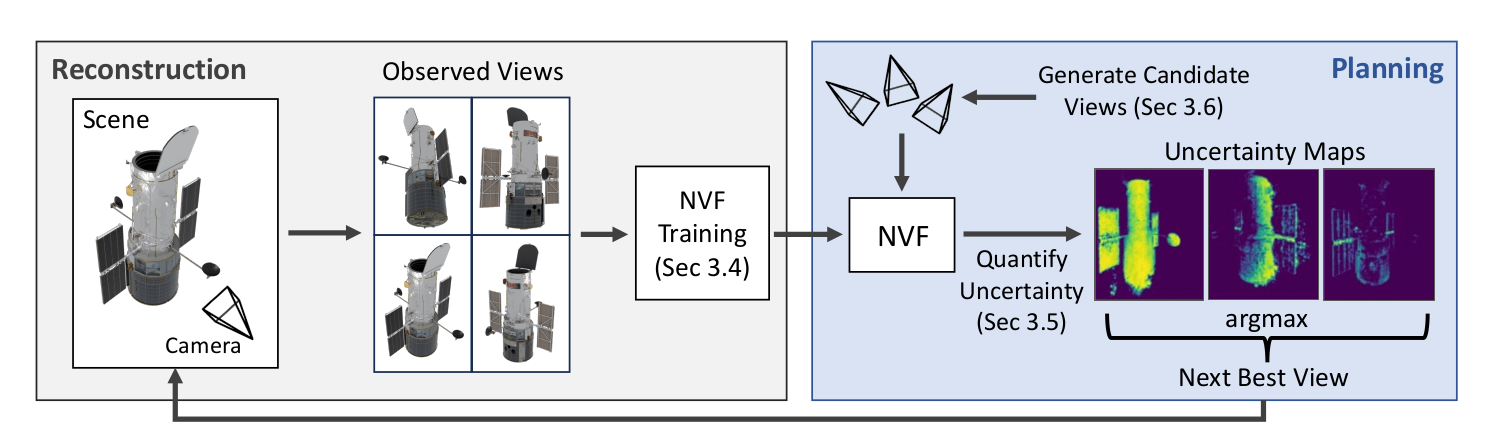

[Paper] [Website] Shangjie Xue, Jesse Dill, Pranay Mathur, Frank Dellaert, Panagiotis Tsiotras, Danfei Xu. IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR) 2024

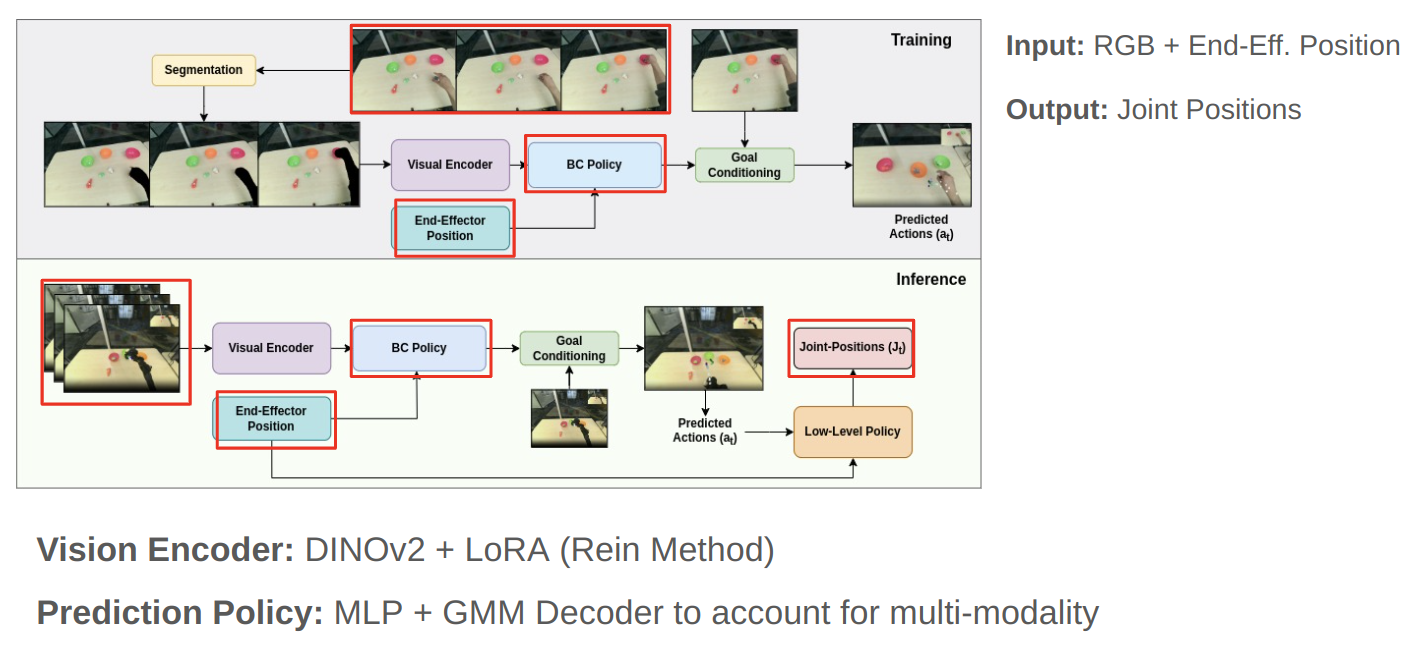

[Paper] [Video] [Poster] [Best Paper Award] Pranay Mathur. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Geriatronics AI Workshop 2023

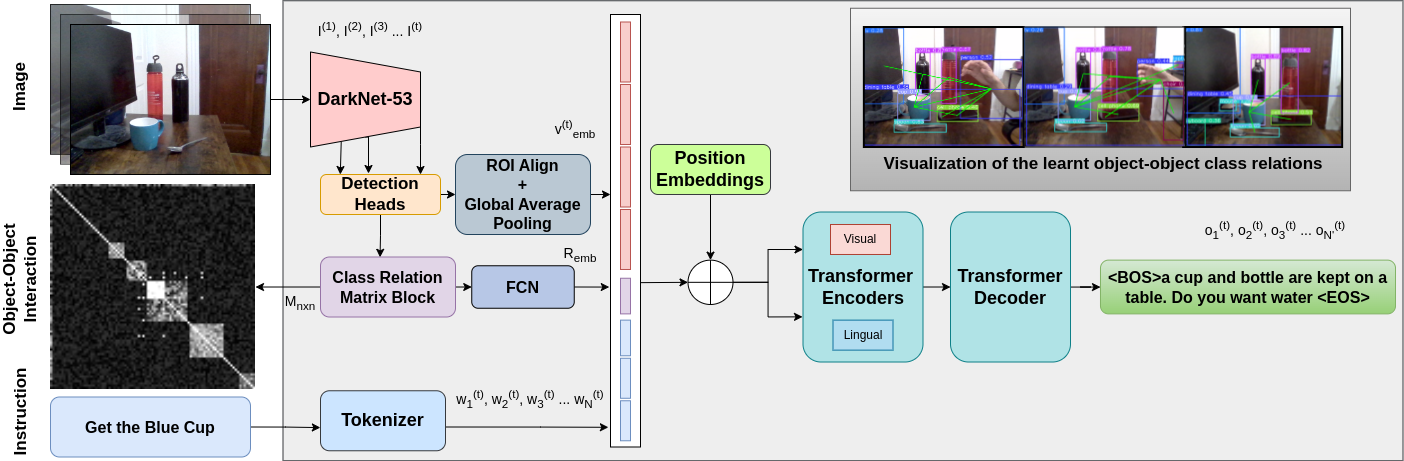

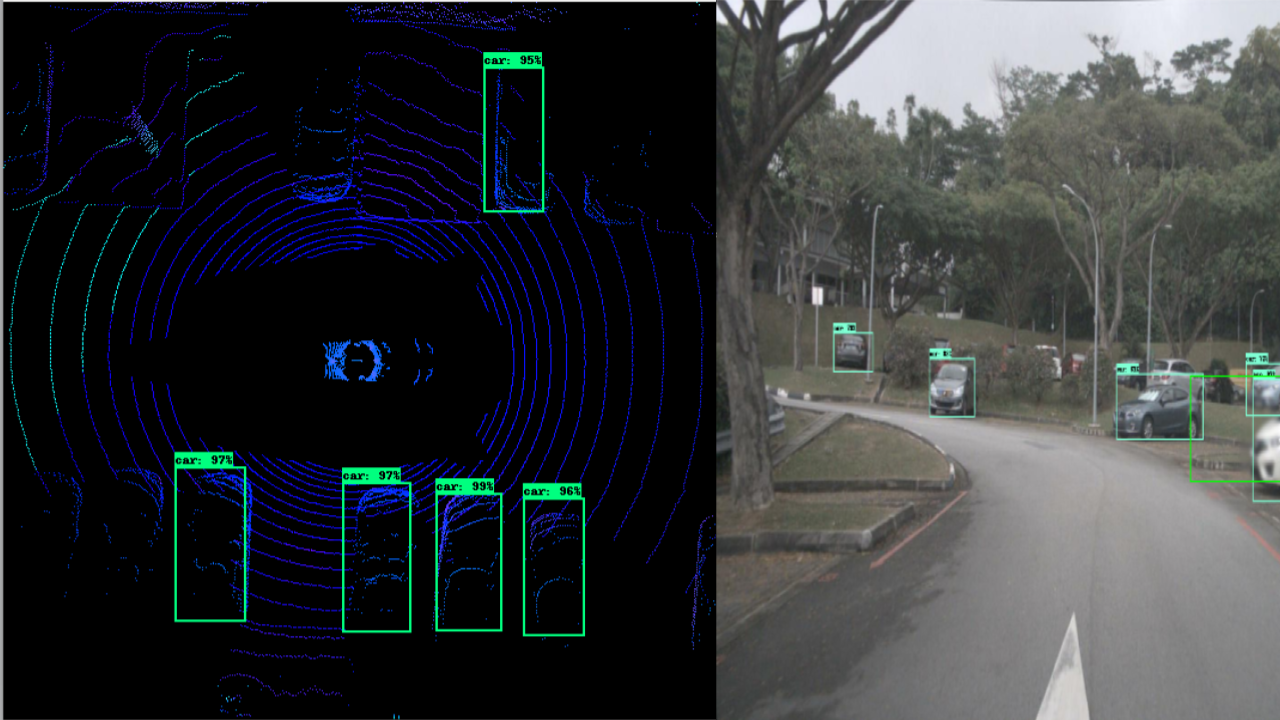

[Paper] Pranay Mathur, Rajesh Kumar, Sarthak Upadhyay. arXiv 2022

[Paper] [Video] Pranay Mathur, Nikhil Khedekar, Kostas Alexis. IEEE-RAS International Conference on Advanced Robotics 2021

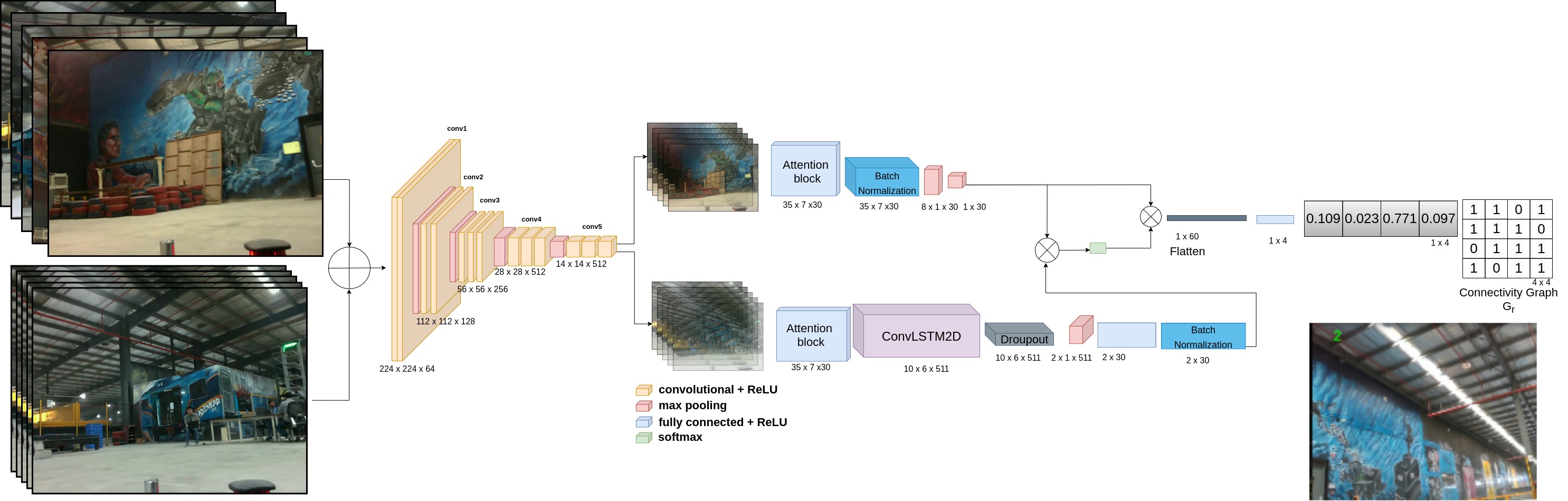

[Paper] [Code] [Best Paper Award] Pranay Mathur, Yash Jangir, Neena Goveas. IEEE International Symposium of Asian Control Association on Intelligent Robotics and Industrial Automation 2021

[Paper] [Code] Pranay Mathur, Ravish Kumar, Rahul Jain. Springer International Conference on Machine Learning and Autonomous Systems 2021

[Paper] [Code] Kshitij Chhabra, Pranay Mathur, Veeky Baths. IEEE International Conference on Systems, Man and Cybernetics 2020

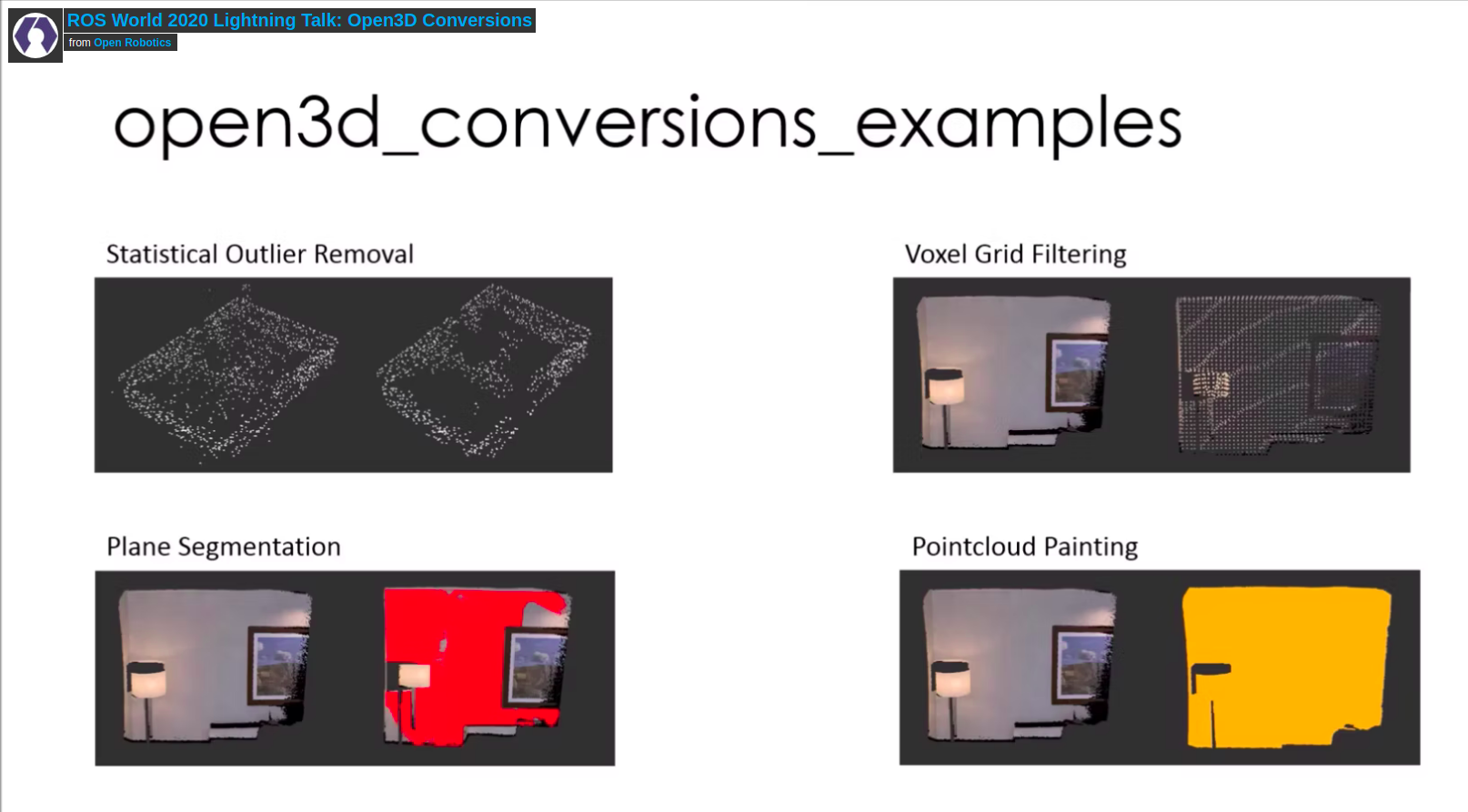

[ROS World Lightning Talk] [Code]

Jan '25 - present

June '24 - Jan '25

May '23 - Aug '23

June '22 - Sept '22

August '21 - July '22

January '21 - August '21

July '20 - January '21

May '20 - July '20

May '19 - July '19

Aug '19 - Dec '19

Click here to view my awards and media spotlight

This website is a modification of Jon Barron's website. You can find the source code here.